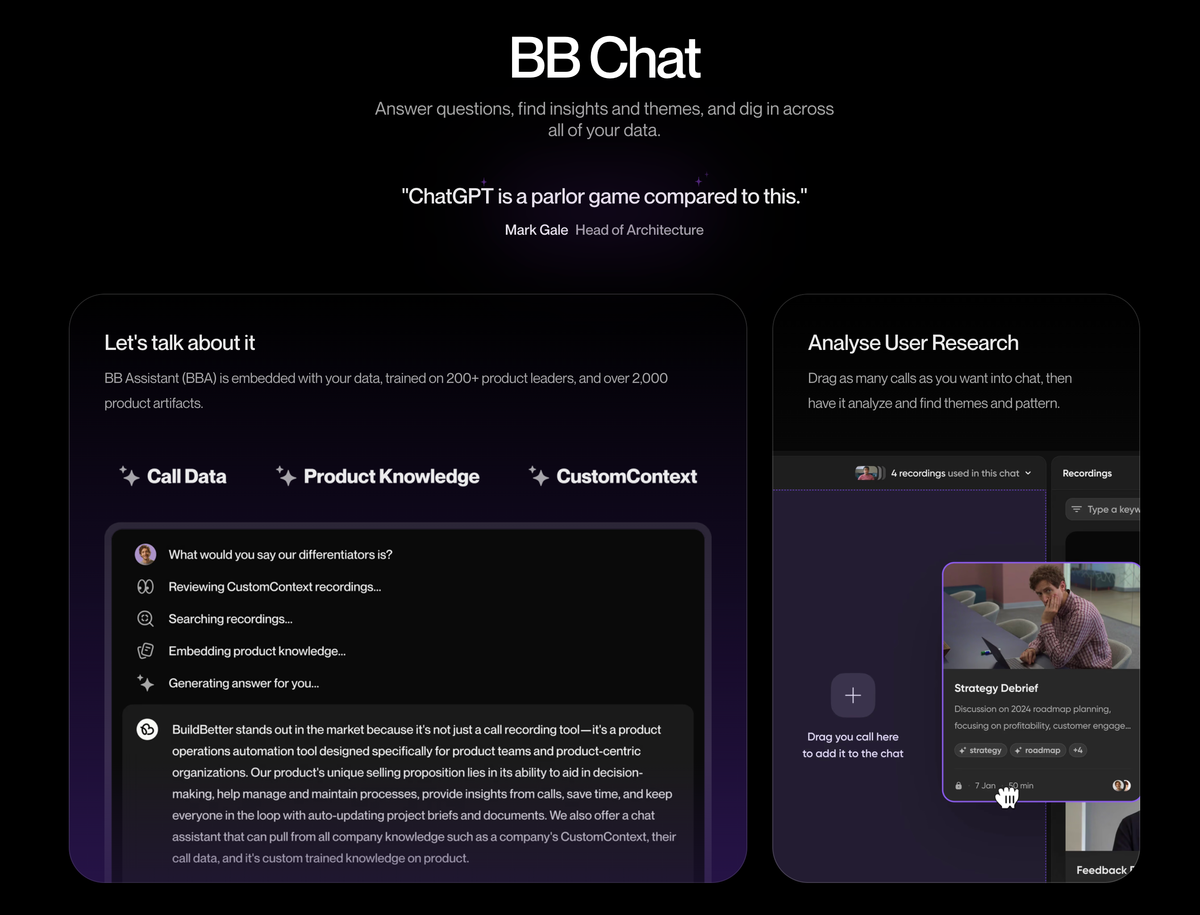

We added major improvements to chat quality and performance

Today we released enhancements to the chat experience that significantly improve response quality through better back-end prompting, new proprietary tools that let the LLMs query your workspace data more effectively, and more detailed reasoning and interaction.

The BuildBetter chat experience can now fully deliver on the promise made on the BuildBetter landing page by quickly answering the age-old product management question:

What are your customers asking for that your product team isn't working on?

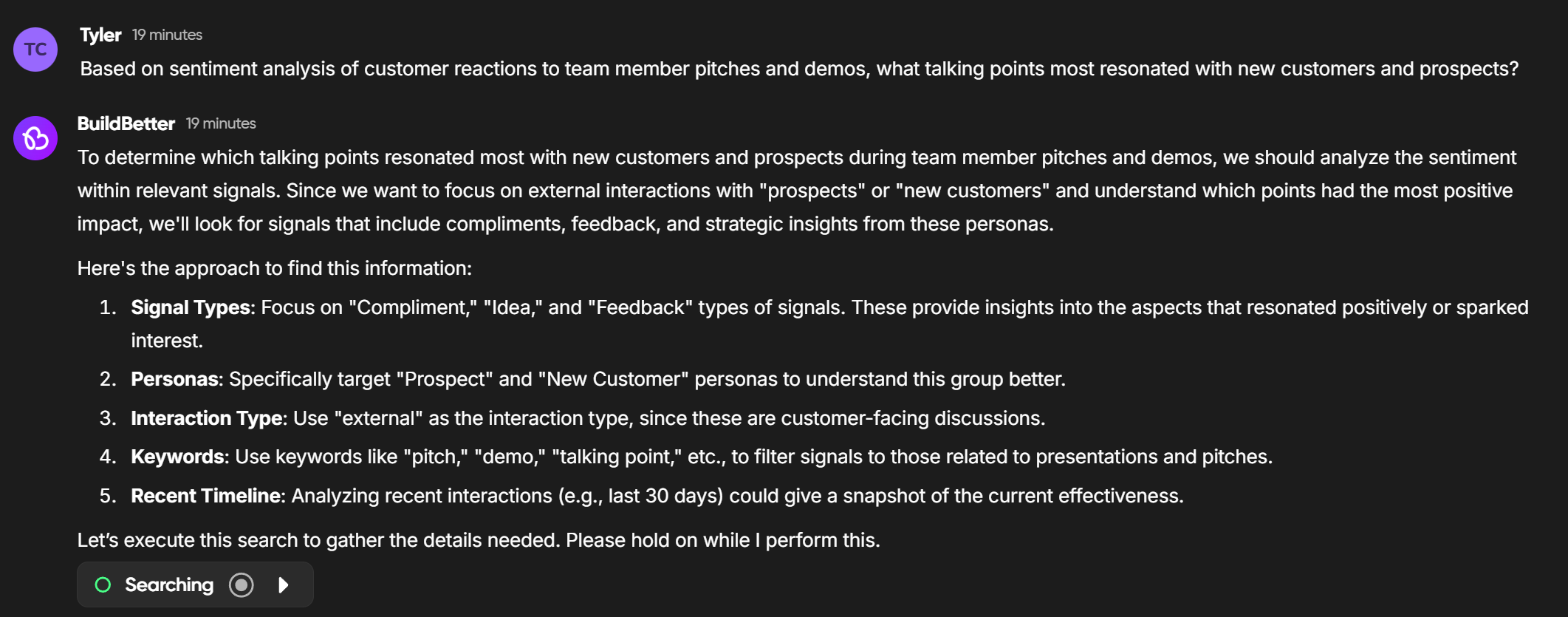

Customer beta testing also showed impressive results across a variety of prompts, like the ones below. Responses were accurate, consistent, and sourced, and they were highly relevant, contextual, and closely matched what the PMs themselves were seeing first-hand.

"What are the most common feature requests and pain-points from customer onboarding calls? Can you rank them by severity and frequency of occurrence?"

"Identify the top 10 reasons prospects choose not to use BuildBetter based on objections from sales demos. Match these to planned roadmap items."

"Based on sentiment analysis of customer reactions to team member pitches and demos, what talking points most resonated with new customers and prospects?"

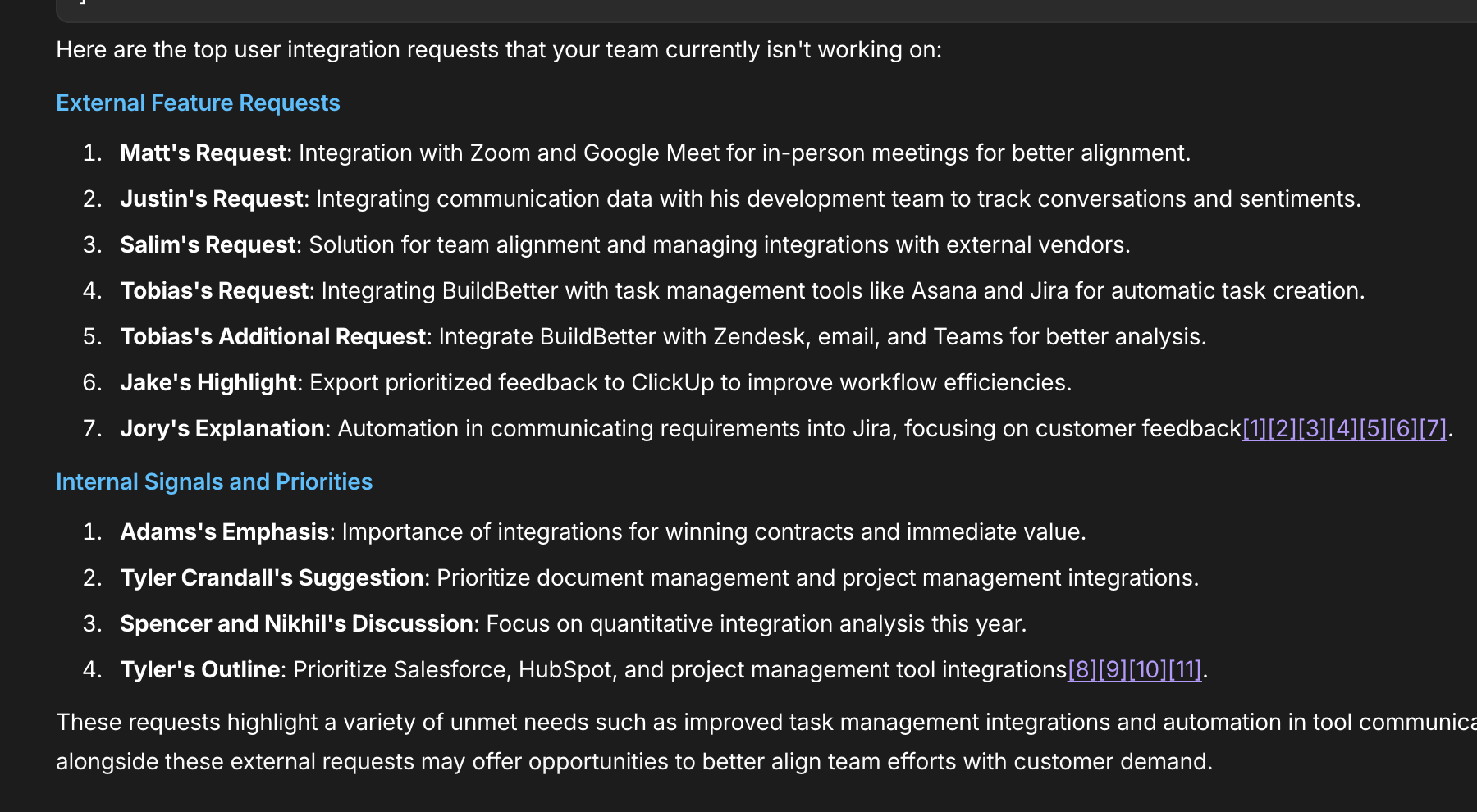

The new "tools" given to the chat LLMs let the models work through the same steps a human PM would follow when using the available data:

- First, it parses the question to determine what the user is trying to achieve, and asks for more context or clarification if needed.

- Next, it determines which of the available tools will best help it solve the problem. Should it review transcripts or summaries? Should it look for specific documents you've written? Are there relevant Signal insights, and if so, which types match? Which keywords and topics are relevant?

- It then applies filters based on parameters from the prompt to narrow its search. It can filter by Signal type, call source, person or company, persona, and more.

- Finally, it formats a response and asks the user for any follow-up input.
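To make the first three steps more concrete, here is a minimal, hypothetical sketch of how a question might be turned into a search plan. It is not BuildBetter's implementation: the tool names (`signals`, `transcripts`, `documents`), the filter names (`call_source`, `persona`), and the keyword lists are all assumptions, and simple keyword matching stands in for the LLM's own reasoning about which tools and filters to use.

```python
from dataclasses import dataclass, field

# Hypothetical tool catalogue (assumption): each tool is matched by keywords.
# A real system would let the LLM choose tools; keywords stand in for that here.
TOOL_KEYWORDS = {
    "signals": ["feature request", "pain point", "objection", "sentiment"],
    "transcripts": ["call", "demo", "pitch"],
    "documents": ["roadmap", "document"],
}

# Hypothetical filter hints (assumption): phrase in prompt -> (filter, value).
FILTER_HINTS = {
    "onboarding": ("call_source", "onboarding"),
    "sales demo": ("call_source", "sales demo"),
    "prospect": ("persona", "prospect"),
}


@dataclass
class QueryPlan:
    tools: list[str] = field(default_factory=list)
    filters: dict[str, str] = field(default_factory=dict)
    needs_clarification: bool = False


def plan_query(question: str) -> QueryPlan:
    """Parse the question, pick tools, and derive search filters."""
    q = question.lower()
    plan = QueryPlan()
    # Pick every tool whose keywords appear in the question.
    for tool, keywords in TOOL_KEYWORDS.items():
        if any(k in q for k in keywords):
            plan.tools.append(tool)
    # Turn recognised phrases into filters that narrow the search.
    for phrase, (name, value) in FILTER_HINTS.items():
        if phrase in q:
            plan.filters[name] = value
    # If no tool applies, ask the user for more context instead of guessing.
    plan.needs_clarification = not plan.tools
    return plan
```

For example, the onboarding-calls prompt above would select the `signals` and `transcripts` tools with a `call_source` filter of `onboarding`, while a vague prompt that matches no tool sets `needs_clarification` so the assistant can ask a follow-up question.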

Not only do these results show a high degree of usefulness, quality, and relevance, but chat performance has also improved dramatically: in some cases, responses are 10x faster or more.

The new logic behind these improvements also lays the groundwork for more autonomous workflows, experiences, and agents, which we'll share more about very soon.

There's nothing users need to do to get started: the update has already been released to all workspaces, regardless of tier.